06 May 2015

Algolia’s DNA is really about performance. We want our search engine to answer

relevant results as fast as possible.

To achieve the best end-to-end performance we’ve decided to go with JavaScript

since the total beginning of Algolia. Our end-users search using our REST API

directly from their browser - with JavaScript - without going through the

websites’ backends.

Our JavaScript & Node.js API clients were implemented 2 years ago and were now

lacking of all modern best practices:

- not following the error-first or callback-last conventions;

- inconsistent API between the Node.js and the browser implementations;

- no Promise support;

- Node.js module named algolia-search, browser module named algoliasearch;

- cannot use the same module in Node.js or the browser (obviously);

- browser module could not be used with browserify or webpack. It was exporting multiple properties directly in the window object.

This blog post is a summary of the three main challenges we faced while

modernizing our JavaScript client.

tl;dr;

Now the good news: we have a new isomorphic JavaScript API

client.

Isomorphic JavaScript apps are JavaScript applications that can run both

client-side and server-side.

The backend and frontend share the same code.

isomorphic.net

Here are the main features of this new API client:

If you were using our previous libraries, we have migration guides for both

Node.js and the

browser.

#

Challenge #1: testing

Before being able to merge the Node.js and browser module, we had to remember

how the current code is working. An easy way to understand what a code is

doing is to read the tests. Unfortunately, in the previous version of the

library, we had only one test. One test was

not enough to rewrite our library. Let’s go testing!

Unit? Integration?

When no tests are written on a library of ~1500+

LOC,

what are the tests you should write first?

Unit testing would be too close to the implementation. As we are going to

rewrite a lot of code later on, we better not go too far on this road right

now.

Here’s the flow of our JavaScript library when doing a search:

- initialize the library with

algoliasearch()

- call

index.search('something', callback)

- browser issue an HTTP request

callback(err, content)

From a testing point of view, this can be summarized as:

- input: method call

- output: HTTP request

Integration testing for a JavaScript library doing HTTP calls is interesting

but does not scale well.

Indeed, having to reach Algolia servers in each test would introduce a shared

testing state amongst developers and continuous integration. It would also

have a slow TDD feedback because of heavy network usage.

Our strategy for testing our JavaScript API client was to mock (do not run

away right now) the XMLHttpRequest object.

This allowed us to test our module as a black box, providing a good base for a

complete rewrite later on.

This is not unit testing nor integration testing, but in between. We also

planned in the coming weeks on doing a separate full integration testing suite

that will go from the browser to our servers.

faux-jax to the rescue

Two serious candidates showed up to help in testing HTTP request based

libraries

Unfortunately, none of them met all our requirements. Not to mention, the

AlgoliaSearch JavaScript client had a really smart failover request strategy:

This seems complex but we really want to be available and compatible with

every browser environment.

- Nock works by mocking calls to the Node.js http module, but we directly use the XMLHttpRequest object.

- Sinon.js was doing a good job but was lacking some XDomainRequest feature detections. Also it was really tied to Sinon.js.

As a result, we created algolia/faux-jax. It is now pretty stable and can mock XMLHttpRequest, XDomainRequest and

even http module from Node.js. It means faux-jax is an isomorphic HTTP

mock testing tool. It was not designed to be isomorphic. It was easy to add

the Node.js support thanks to moll/node-mitm.

Testing stack

The testing stack is composed of:

The fun part is done, now onto the tedious one: writing tests.

Spliting tests cases

We divided our tests in two categories:

- simple test cases: check that an API command will generate the corresponding HTTP call

- advanced tests: timeouts, keep-alive, JSONP, request strategy, DNS fallback, ..

Simple test cases

Simple test cases were written as table driven

tests:

It’s a

simple JavaScript file, exporting test cases as an

array

It’s a

simple JavaScript file, exporting test cases as an

array

Creating a testing stack that understands theses test-cases was some work. But

the reward was worth it: the TDD feedback loop is great. Adding a new feature

is easy: fire editor, add test, implement annnnnd done.

Advanced tests

Complex test cases like JSONP fallback, timeouts and errors, were handled in

separate, more advanced tests:

Here we test

that we are using JSONP when XHR fails

Here we test

that we are using JSONP when XHR fails

Testing workflow

To be able to run our tests we chose

defunctzombie/zuul.

Local development

For local development, we have an npm test task that will:

- launch the browser tests using phantomjs,

- run the Node.js tests,

- lint using eslint.

You can see the task in the

package.json. Once run it looks like this:

640 passing

assertions and counting!

640 passing

assertions and counting!

But phantomjs is no real browser so it should not be the only answer to “Is my

module working in browsers?”. To solve this, we have an npm run

dev task that

will expose our tests in a simple web server accessible by any browser:

All of theses

features are provided by defunctzombie/zuul

All of theses

features are provided by defunctzombie/zuul

Finally, if you have virtual machines, you can

test in any browser you want, all locally:

Here’s a

VirtualBox setup created with

xdissent/ievms

Here’s a

VirtualBox setup created with

xdissent/ievms

What comes next after setting up a good local development workflow? Continuous

integration setup!

Continuous integration

defunctzombie/zuul supports running

tests using Saucelabs browsers. Saucelabs provides

browsers as a service (manual testing or Selenium automation). It also has a

nice OSS plan called Opensauce. We patched

our .zuul.yml configuration file

to specify what browsers we want to test. You can find all the details in

zuul’s wiki.

Now there’s only one missing piece: Travis CI.

Travis runs our tests in all browsers defined in our .zuul.yml file. Our

travis.yml looks like this:

All platforms are tested using a travis build

matrix

All platforms are tested using a travis build

matrix

Right now tests are taking a bit too long so we will soon split them between

desktop and mobile.

We also want to to tests on pull requests using only latest stable versions of

all browsers. So that it does not takes too long. As a reward, we get a nice

badge to display in our

Github readme:

Gray color

means the test is currently running

Gray color

means the test is currently running

Challenge #2: redesign and rewrite

Once we had a usable testing stack, we started our rewrite, the V3

milestone on Github.

Initialization

We dropped the new AlgoliaSearch() usage in favor of just

algoliasearch(). It allows us to hide implementation details to our API

users.

Before:

new AlgoliaSearch(applicationID, apiKey, opts);

After:

algoliasearch(applicationID, apiKey, opts);

Callback convention

Our JavaScript client now follows the error-

first and callback-last conventions. We had to break some methods to

do so.

Before:

client.method(param, callback, param, param);

After:

client.method(params, param, param, params, callback);

This allows our callback lovers to use libraries like

caolan/async very easily.

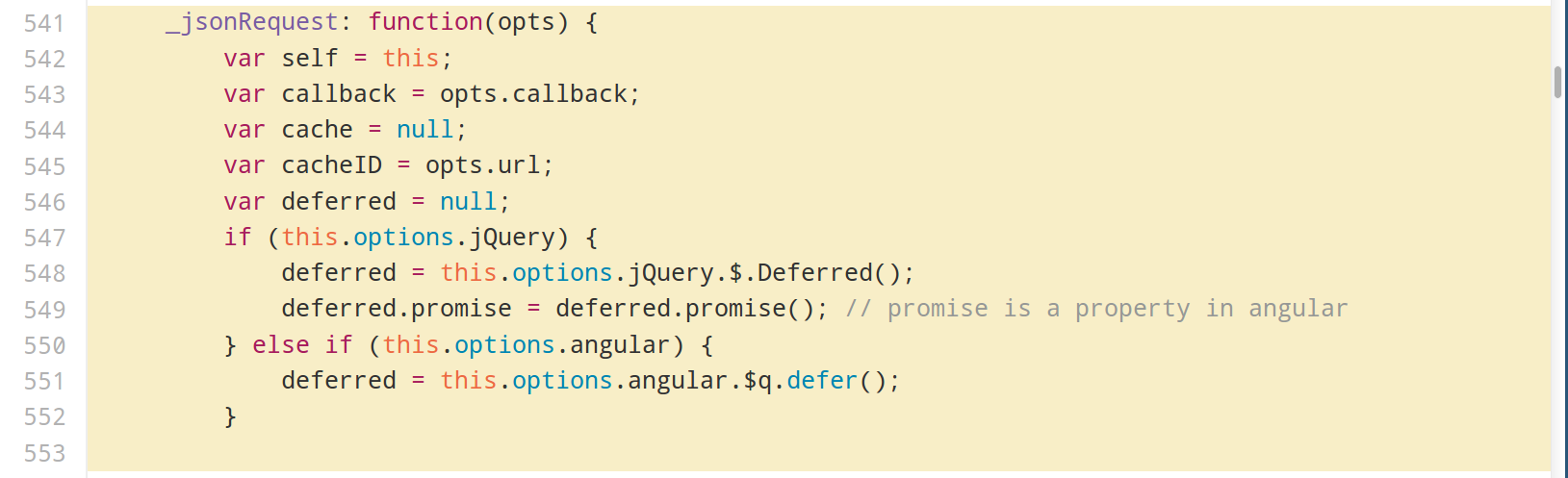

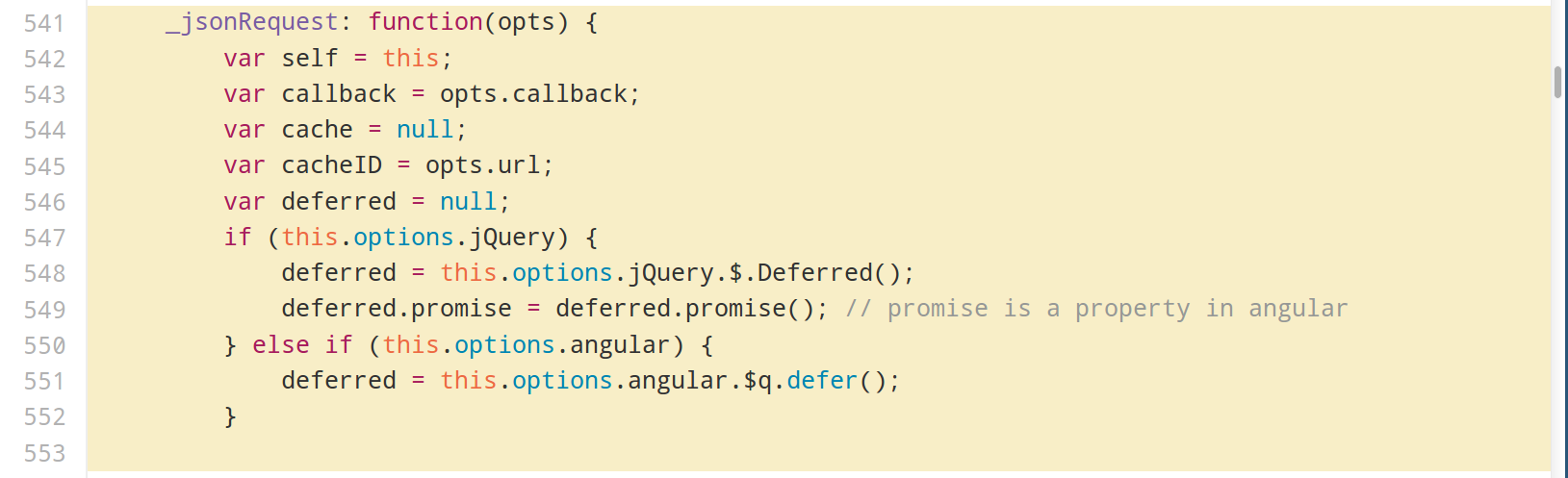

Promises and callbacks support

Promises are a great way to handle the asynchronous flow of your application.

Promise partisan? Callback connoisseur? My API now lets you switch between

the two! http://t.co/uPhej2yAwF (thanks

@NickColley!)

— pouchdb (@pouchdb) March 10,

2015

We implemented both promises and callbacks, it was nearly a no-brainer. In

every command, if you do not provide a callback, you get a Promise.

We use native promises in compatible

environments and

jakearchibald/es6-promise as

a polyfill.

AlgoliaSearchHelper removal

The main library was also previously exporting window.AlgoliaSearchHelper to

ease the development of awesome search UIs. We externalized this project and

it now has now has a new home at algolia/algoliasearch-helper-

js.

UMD

UMD: JavaScript modules that run anywhere

The previous version was directly exporting multiple properties in the

window object. As we wanted our new library to be easily compatible with a

broad range of module loaders, we made it UMD

compatible. It means our library can be used:

This was achieved by writing our code in a

CommonJS style and then use the

standalone build feature of browserify.

see

browserify usage

see

browserify usage

Multiple builds

Our JavaScript client isn’t only one build, we have multiple builds:

Previously this was all handled in the main JavaScript file, leading to unsafe

code like this:

How do we solve this? Using inheritance! JavaScript prototypal inheritance is

the new code smell in 2015. For us it was a good way to share most of the code

between our builds. As a result every entry point of our builds are inheriting

from the src/AlgoliaSearch.js.

Every build then need to define how to:

Using a simple inheritance pattern we were able to solve a great challenge.

Example of

the vanilla JavaScript build

Example of

the vanilla JavaScript build

Finally, we have a build script that

will generate all the needed files for each environment.

Challenge #3: backward compatibility

We could not completely modernize our JavaScript clients while keeping a full

backward compatibility between versions. We had to break some of the previous

usages to level up our JavaScript stack.

But we also wanted to provide a good experience for our previous users when

they wanted to upgrade:

- we re-exported previous constructors like window.AlgoliaSearch*. But we now throw if it’s used

- we wrote a clear migration guide for our existing Node.js and JavaScript users

- we used npm deprecate on our previous Node.js module to inform our current user base that we moved to a new client

- we created legacy branches so that we can continue to push critical updates to previous versions when needed

Make it isomorphic!

Our final step was to make our JavaScript client work in both Node.js and the

browser.

Having separated the builds implementation helped us a lot, because the

Node.js build is a regular build only using the http module from Node.js.

Then we only had to tell module loaders to load index.js on the server and

src/browser/.. in browsers.

This last step was done by configuring browserify in our

package.json:

the browser

field from

browserify also works in webpack

the browser

field from

browserify also works in webpack

If you are using the algoliasearch module with browserify or webpack, you

will get our browser implementation automatically.

The faux-jax library is released under MIT like all our open source

projects. Any feedback or improvement idea are welcome, we are dedicated to

make our JS client your best friend :)

20 Apr 2015

Browsing the cumbersome interfaces of government websites in the lookout for

reliable data can be a very frustrating experience. It’s full of specific

terminology and there’s not a government website that looks the same. It’s

like each time you want to use a car, you have to learn to drive all over

again.

Connect ideas with economic insight in a matter of seconds

That’s what Quadrant.io is for.

Solving the frustration anyone who makes their points with facts encounters

when routinely performing data search. It offers them with the fastest and

easiest way to find and chart economic data from trusted sources.

Acknowledging that it can quickly become a nightmare to find reliable

information scattered all over the web,

Quadrant is on a mission to shorten

any data search to seconds. So that data users can spend less time finding

data and more time analysing it.

To keep this promise, Quadrant provides data users with an intuitive platform

that aggregates more than 400,000 indicators from over 1,000 public sources,

and keep them updated in real time. A powerful search allowing any user to

find exactly what they are looking for even if they do not use economists’

jargon is a must-have functionality in such a service.

And Algolia stood out as the perfect search solution for Quadrant.

Provide a rewarding search experience to End-Users

First because of** the rewarding search experience it allows to deliver to its

users.**

Algolia surfaces data relevant to people’s search in milliseconds, showing the

most appropriate results from the very first keystroke.

It enables to search across different entry points corresponding to the

different attributes describing data series (release date, source).

That wasn’t possible with other search solutions they tested before. After

implementing Algolia, Quadrant.io received nice feedback from their customers,

saying that “search was much more comfortable, much more intuitive”.

Algolia empowers anyone to be a search expert

Second, because of the simple experience it is to deploy Algolia on their web

app. Back-end documentation and customer support was a major help: it took

them less than a week to implement instant search, including relevance

tweaking and front-end development. As Dane Vrabrac, co-founder of Quadrant.io

concluded “with Algolia, it’s awesome all the stuff I can do as a non

developer !”

Images courtesy of Quadrant.io. Learn more on their

website.

18 Feb 2015

At Algolia, we allow developers to provide a unique interactive search

experience with as-you-type search, instant faceting, mobile geo-search and on

the fly spell check.

Our Distributed Search Network aims at removing the impact of network latency

on the speed of search, allowing our customers to offer this instant

experience to all their end-users, wherever they may be.

Every millisecond matters

We are obsessed with speed and we’re not the only ones: Amazon found out that

100ms in added latency cost them 1% in sales. The lack of responsiveness for

a search engine can really be damaging for one’s

business. As

individuals, we are all spoiled when it comes to our search expectations:

Google has conditioned the whole planet to expect instant results from

anywhere around the world.

We have the exact same expectations with any online service we use. The thing

is that for anyone who is not Google, it is just impossible to meet these

expectations because of the network latency due to the physical distance

between the service backend that hosts the search engine and the location of

the end-user.

Even with the fastest search engine in the world, it can still take hundreds

of milliseconds for a search result to reach Sydney from San Francisco. And

this is without counting the bandwidth limitations of a saturated oversea

fiber!

How we beat the speed of light

Algolia’s Distributed Search Network (DSN) removes the latency from the speed

equation by replicating your indices to different regions around the world,

where your users are.

Your local search engines are clones synchronized across the world. DSN allows

you to distribute your search among 12 locations including the US,

Australia, Brazil, Canada, France, Germany, Hong Kong, India, Japan, Russia,

and Singapore. Thanks to our 12 data centers, your search engine can now

deliver search results under 50ms in the world’s top markets, ensuring an

optimal experience for all your users.

How you activate DSN

Today, DSN is only accessible to our Starter, Growth, Pro and Enterprise plan

customers. To activate it, you simply need to go in the “Region” tab at the

top of your Algolia dashboard and select “Setup DSN”.

You will then be displayed with a map and a selection of your top countries in

terms of search traffic. Just select our DSN data centers on the map and see

how performance in those countries is optimized.

Algolia will then automatically take care of the distribution and the

synchronization of your indices around the world. End-users’ queries will be

automatically routed to the closest data center among those you’ve selected,

ensuring the best possible experience. Algolia DSN delivers an ultra low

response time and automatic fail-over on another region if a region is down.

It is that simple!

Today, several services including HackerNews, TeeSpring, Product Hunt and

Zendesk are leveraging Algolia DSN to provide faster search to their global

users.

Want to find out more about the Algolia experience ?

Discover and try it here!

12 Jan 2015

Exactly a year ago, we began to power the Hacker News search engine (see our

blog post. Since then,

our HN search project has grown a lot, expanding from 20M to 25M indexed

items, and serving** from 900K to 30M searches a month**.

In addition to hn.algolia.com we’re also providing

build various readers or monitor tools and we love the applications you’re

building on top of us. The community was also pretty active on

GitHub, requesting improvements

and catching bugs… keep on contributing!

Eating our own dog food on HN search

We are power users of Hacker News and there isn’t a single day we don’t

use it. Being able to use our own engine on a tool that is so important to us

has been a unique opportunity to eat our own dog food. We’ve added a

lot of API features during the year but unfortunately didn’t have the time to

refresh the UI so far.

One of our 2015 resolutions was to push the envelope of the HN search UI/UX:

- make it more readable,

- more usable,

- and use modern frontend frameworks.

That’s what motivated us to release a new experimental version of HN

Search. Try it out and tell us what

you think!

Applying more UI best practices

We’ve learned a lot of things from the

comments of the users of the

previous version. We also took a look at all the cool

apps built on top of our API. We wanted to

apply more UI best practices and here is what we ended with:

Focus on instantaneity

The whole layout has been designed to provide an instant experience, reducing

the wait time before the actual content is displayed. It’s also a way to

reduce the number of mouse clicks needed to access and navigate through the

content. The danger with that kind of structure can be to end up with a

flickering UI where each user action redraw the page, activating unwanted

behaviors and consuming a huge amount of memory.We focused on a smooth

experience. Some of the techniques used are based on basic performance

optimizations but in the end what really matters for us is the user’s

perception of latency between each interactions, more than objective

performance. Here are some of the tricks we applied:

- Toggle comments: we wanted the user to be able to read all the comments of a story on the same page, our API on top of Firebase allowed us to load and display them with a single call.

- Sticky posts: in some cases we are loading up to 500 comments, we wanted the user to be able to keep the information of what he is reading and easily collapse it, so we decided to keep the initial post on top of the list.

- Lazy-loading of non-cached images: when you are refreshing the UI for each request you don’t want every thumbnail to flick on the UI when loading. So we applied a simple fade to avoid that. But there is actually no way to know if an image is already loaded or not from a previous query. We manage to detect that with a small timeout.

- Loading feedback: the most important part of a reactive UI is to always give the user a feedback on the state of the UI. We choose to add this information with a thin loading bar on top of the page.

- Deferring the load of some unnecessary elements: this one is about performance. When you are displaying about 20 repeatable items on each keypress you want them as light as possible. In our case we are using Angular.js with some directives which were too slow to render. So we ended up rendering them only if the user interact with them.

- Cache every requests: It’s mainly about the backspace key. When a user want to modify his query by removing some characters you don’t want to make him wait for the result: that’s cached by the Algolia JS API client.

Focus on readability

We’ve learned a lot from your comments while releasing our first HN Search

version last year. Readability of the search results must be outstanding to

allow you to quickly understand why the results are retrieved and what they

are about. We ended up with 2 gray colors and 2 font weights to ease the

readability without being too distracting.

Stay as minimal as possible

If you see unnecessary stuffs, please tell us. We are not looking for the most

‘minimal’ UI but for the right balance between usability and minimalism.

Sorting & Filtering improvements

Most HN Search users are advanced users. They know exactly what they are

searching for and want to have the ability to sort and filter their results

precisely. We are now exposing a simple way to either sort results by date or

popularity in addition to the period filtering capabilities we already had.

We thought it could make a lot of sense to be able to read the comments of a

story directly from the search result page. Keeping in mind it should be super

readable, we went for indentations & author colored avatars making it really

clear to understand who is replying.

Search settings

Because HN Search users are advanced users, they want to be able to customize

the way the default ranking is working. So be it, we’ve just exposed a subset

of the underlying settings we’re using for the search to let you customize it.

Front page

Since Firebase is providing the official API of Hacker News, fetching the

items currently displayed on the front page is really easy. We decided to pair

it with our search, allowing users to search for hot stories & comments

through a discreet menu item.

Starred

Let’s go further; what about being able to star some stories to be able to

search in them later? You’re now able to star any stories directly from the

results page. The stars are stored locally in your browser for now. Let us

know if you find the feature valuable!

Contribution

As you may know, the whole source code of the HN Search website is open-source

and hosted on GitHub. This new version is still based on a Rails 4 project and

uses Angular.js as the frontend framework. We’ve improved the README to help

you being able to contribute in minutes. Not to mention: we love pull-

requests.

Now is starting again the most important part of this project, user testing. We count on you to bring us the necessary information to make this search your favorite one.

Wanna test?

To try it, go to our experimental version of HN Search, go to “Settings”, and enable the new style:

Want to contribute?

It’s open-source and we’ll be happy to get your feedback! Just use GitHub’s

issues to report any idea you

have in mind. We also love pull-requests :)

Source code: https://github.com/algolia/hn-search

23 Dec 2014

By working every day on building the best search engine, we’ve become obsessed

with our own search experience on the websites and mobile applications we use.

We’re git addicts and love using GitHub to store every single idea or project

we work on. We use it both for our private and public repositories (12 API

clients, HN

Search or various

d

e m o

s. We use every day its search

function and we decided to re-build it the way we thought it should be. We’re

proud to share it with the community via this Chrome

extension. Our Github Awesome Autocomplete

enables a seamless and fast access to GitHub resources via an as-you-type

search functionality.

Install your Christmas Gift now!

Features

The Chrome extension replaces GitHub’s search bar and add autocomplete

capabilities on:

How does it work?

We continuously retrieve the most watched repositories and the last active

users using GitHub Archive dataset. Users and

repositories are stored in 2 Algolia indices: users and repositories. The

queries are performed using our JavaScript API

client and the

autocomplete menu is based on Twitter’s

typeahead.js library.

The underlying Algolia account is replicated in 6 regions using our

DSN feature, answering every query in 50-100ms

wherever you are (network latency included!). Regions include US West, US

East, Europe, Singapore, Australia & India.

Exporting the records from GitHub Archive

We used GitHub’s Archive dataset to export top repositories and last active

users using Google’s BigQuery:

;; export repositories

SELECT

a.repository_name as name,

a.repository_owner as owner,

a.repository_description as description,

a.repository_organization as organization,

a.repository_watchers AS watchers,

a.repository_forks AS forks,

a.repository_language as language

FROM [githubarchive:github.timeline] a

JOIN EACH

(

SELECT MAX(created_at) as max_created, repository_url

FROM [githubarchive:github.timeline]

GROUP EACH BY repository_url

) b

ON

b.max_created = a.created_at and

b.repository_url = a.repository_url

;; export users

SELECT

a.actor_attributes_login as login,

a.actor_attributes_name as name,

a.actor_attributes_company as company,

a.actor_attributes_location as location,

a.actor_attributes_blog AS blog,

a.actor_attributes_email AS email

FROM [githubarchive:github.timeline] a

JOIN EACH

(

SELECT MAX(created_at) as max_created, actor_attributes_login

FROM [githubarchive:github.timeline]

GROUP EACH BY actor_attributes_login

) b

ON

b.max_created = a.created_at and

b.actor_attributes_login = a.actor_attributes_login

Configuring Algolia indices

Here are the 2 index configurations we used to build the search:

Repositories

Users

#

Want to contribute?

It’s open-source and we’ll be happy to get your feedback! Just use GitHub’s

issues to

report any idea you have in mind. We also love pull-requests :)

Source code: https://github.com/algolia/github-awesome-

autocomplete

Install it now: [Github Awesome Autocomplete on Google Chrome Store

FREE]

Or just want to add an instant search in your website / application?

Feel free to create a 14-days FREE trial at

http://www.algolia.com and follow one of our step

by step tutorials at

https://www.algolia.com/doc/tutorials

It’s a

simple JavaScript file, exporting test cases as an

array

It’s a

simple JavaScript file, exporting test cases as an

array Here we test

that we are using JSONP when XHR fails

Here we test

that we are using JSONP when XHR fails 640 passing

assertions and counting!

640 passing

assertions and counting! All of theses

features are provided by defunctzombie/zuul

All of theses

features are provided by defunctzombie/zuul Here’s a

VirtualBox setup created with

xdissent/ievms

Here’s a

VirtualBox setup created with

xdissent/ievms All platforms are tested using a travis build

matrix

All platforms are tested using a travis build

matrix Gray color

means the test is currently running

Gray color

means the test is currently running see

browserify usage

see

browserify usage Example of

the vanilla JavaScript build

Example of

the vanilla JavaScript build the browser

field from

browserify also works in webpack

the browser

field from

browserify also works in webpack